In this tutorial, you will learn how to create a new topic using the Kafka Command Line Interface (CLI).

If you are interested in video lessons, check out my video course Apache Kafka for Event-Driven Spring Boot Microservices.

1. Open a new Terminal Window

To create a new Kafka topic using the CLI, you will need to open a terminal window or command prompt.

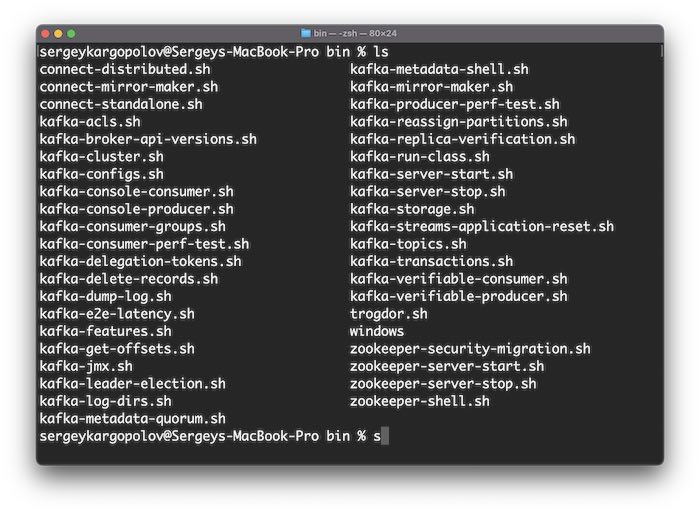

2. Change Directory to Kafka “bin” Folder

Once the terminal window is open, change the directory to your Kafka folder.

Inside the Kafka folder, list the files. You should see a “bin” folder, which contains the Kafka scripts.

If you list the files in the “bin” folder, you will see the scripts you can use to work with Kafka.

Notice that all these scripts have the .sh extension. They are shell scripts meant for Linux and macOS.

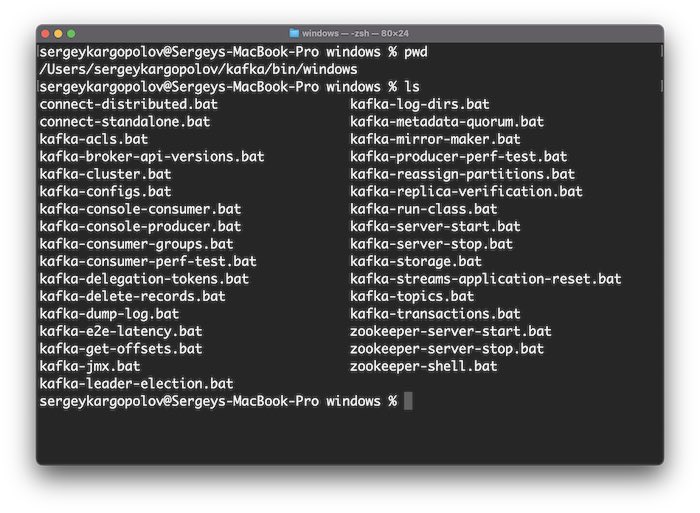

2.1 On Windows

If you are on Windows, change to the “windows” directory, which is also inside the “bin” folder.

If you list the files there, you will see the same Kafka scripts with a .bat extension. These are the scripts to use on Windows; for example, run kafka-topics.bat instead of ./kafka-topics.sh.
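If you want to check both folders from a script, the short helper below is one way to do it. It is a minimal sketch, not part of Kafka: the function name list_kafka_scripts is hypothetical, and it assumes you pass it the path of the folder where you extracted Kafka.

```shell
# Hypothetical helper: list the Kafka CLI scripts inside a Kafka folder.
# $1: path to your Kafka folder (an assumption: wherever you extracted Kafka).
# $2: optional; pass "windows" to list the .bat scripts in bin/windows
#     instead of the .sh scripts in bin.
list_kafka_scripts() {
  if [ "$2" = "windows" ]; then
    script_dir="$1/bin/windows"
    script_ext="bat"
  else
    script_dir="$1/bin"
    script_ext="sh"
  fi
  for script in "$script_dir"/*."$script_ext"; do
    # If nothing matches, the pattern stays unexpanded, so skip it.
    [ -e "$script" ] || continue
    basename "$script"
  done
}

# Example usage (the path is an assumption):
# list_kafka_scripts "$HOME/kafka"
# list_kafka_scripts "$HOME/kafka" windows
```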

3. Creating a new Kafka Topic

To create a new Kafka topic, I will run the following command from the “bin” folder.

```shell
./kafka-topics.sh --create --topic topic1 --partitions 3 --replication-factor 1 --bootstrap-server localhost:9092
```

The above command has several parameters that specify the properties of the topic, such as:

- --create: This tells Kafka that you want to create a new topic, not modify or delete an existing one.

- --topic topic1: This tells Kafka the name of the topic you want to create. You can choose any name you like, as long as it is unique in the cluster and uses only ASCII letters, digits, periods (.), underscores (_), and hyphens (-), up to 249 characters. In this case, I have chosen topic1 as the name.

- --partitions 3: This tells Kafka how many partitions to create for the topic. A partition is an ordered, append-only log that holds part of the topic's data, and the partitions of one topic can be spread across different brokers. More partitions can improve the throughput and scalability of your topic, but they also add complexity and overhead. In this case, I have chosen to create 3 partitions for the topic.

- --replication-factor 1: This tells Kafka how many copies of each partition to keep, counting the original. Replicas are copies of the partition's data stored on other brokers for fault tolerance. More replicas improve the reliability and availability of your topic but also increase storage and network usage. In this case, I have chosen a replication factor of 1, which means each partition has a single copy and no backup.

  IMPORTANT: The replication factor cannot be greater than the number of brokers in your Kafka cluster. If you started only a single Kafka broker, you cannot use a replication factor greater than 1. If you start three brokers in a cluster, you can specify a replication factor of 3, and each partition will have a copy on each broker.

- --bootstrap-server localhost:9092: This parameter specifies one or more addresses of brokers in your Kafka cluster, separated by commas. You do not need to list every broker: the client uses these addresses only for the initial connection, and from there it discovers the other brokers and all the topics and partitions. However, if the only broker you listed is down or unreachable when the client starts, the client cannot connect to the cluster at all, even if other brokers are healthy. Therefore, it is recommended to provide the addresses of at least two brokers with the --bootstrap-server parameter so that the client has a fallback if one broker is unavailable.
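Before running the create command, you may want a quick sanity check of the values described above. The function below is a hypothetical pre-flight check, not part of Kafka: check_topic_args and its helper is_positive_int are illustrative names, and the broker count is an assumption you supply yourself. Kafka performs its own validation and rejects invalid values; this sketch just fails earlier with a plainer message.

```shell
# Hypothetical helper: succeeds if $1 is a whole number >= 1.
is_positive_int() {
  case "$1" in
    "" | *[!0-9]*) return 1 ;;
  esac
  [ "$1" -ge 1 ]
}

# Hypothetical pre-flight check for kafka-topics.sh --create (not part of Kafka).
# $1: topic name, $2: number of partitions,
# $3: replication factor, $4: number of brokers you are running (you supply this).
check_topic_args() {
  topic=$1 partitions=$2 rf=$3 brokers=$4

  # Topic names: only ASCII letters, digits, '.', '_' and '-', at most 249
  # characters, and the names "." and ".." on their own are not allowed.
  case "$topic" in
    "" | . | .. | *[!A-Za-z0-9._-]*)
      printf 'invalid topic name: %s\n' "$topic" >&2
      return 1 ;;
  esac
  if [ "${#topic}" -gt 249 ]; then
    printf 'topic name is longer than 249 characters\n' >&2
    return 1
  fi

  is_positive_int "$partitions" || {
    printf 'the number of partitions must be at least 1\n' >&2
    return 1
  }

  # The replication factor cannot exceed the number of brokers.
  is_positive_int "$rf" && is_positive_int "$brokers" && [ "$rf" -le "$brokers" ] || {
    printf 'replication factor %s must be between 1 and the broker count (%s)\n' "$rf" "$brokers" >&2
    return 1
  }
}

# Example usage, assuming two brokers on localhost ports 9092 and 9093,
# both listed in --bootstrap-server so the client has a fallback:
# check_topic_args topic1 3 2 2 &&
#   ./kafka-topics.sh --create --topic topic1 --partitions 3 --replication-factor 2 \
#     --bootstrap-server localhost:9092,localhost:9093
```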

Once you execute the above command, Kafka creates the new topic and prints a confirmation message such as Created topic topic1.

4. Check if the topic is created successfully

You can use the --describe option to check whether the topic was created successfully.

```shell
./kafka-topics.sh --bootstrap-server localhost:9092 --describe --topic topic1
```

Here, topic1 is the name of the topic.

This will display information about the topic.

```text
Topic: topic1 TopicId: jBusyL-vQLikWwQ9aG_V5w PartitionCount: 3 ReplicationFactor: 1 Configs: segment.bytes=1073741824
Topic: topic1 Partition: 0 Leader: 1 Replicas: 1 Isr: 1
Topic: topic1 Partition: 1 Leader: 1 Replicas: 1 Isr: 1
Topic: topic1 Partition: 2 Leader: 1 Replicas: 1 Isr: 1
```

This will confirm that the topic exists and that we were able to successfully create it.
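If you want to automate this check, for example in a setup script, you could parse the --describe output. The helpers below are a hypothetical sketch: has_partition_count and out_of_sync_partitions are illustrative names, and they assume the "Key: value" layout shown above, which is meant for humans and may change between Kafka versions. For the second check, kafka-topics.sh also offers a built-in --under-replicated-partitions option that you can combine with --describe.

```shell
# Hypothetical helpers that read kafka-topics.sh --describe output on stdin.
# They assume the "Key: value" layout shown above.

# Succeeds if the PartitionCount field equals $1.
has_partition_count() {
  actual_count=$(sed -n 's/.*PartitionCount:[[:space:]]*\([0-9][0-9]*\).*/\1/p')
  [ "$actual_count" = "$1" ]
}

# Prints the number of every partition whose in-sync replica list (Isr) has
# fewer entries than its replica list. Isr is a subset of Replicas, so comparing
# the counts works even when the two lists are ordered differently.
out_of_sync_partitions() {
  awk '/Partition: [0-9]/ {
    partition = ""; replicas = ""; isr = ""
    for (i = 1; i < NF; i++) {
      if ($i == "Partition:") partition = $(i + 1)
      if ($i == "Replicas:") replicas = $(i + 1)
      if ($i == "Isr:") isr = $(i + 1)
    }
    if (split(replicas, r, ",") != split(isr, s, ",")) print partition
  }'
}

# Example usage:
# ./kafka-topics.sh --bootstrap-server localhost:9092 --describe --topic topic1 \
#   | has_partition_count 3 && echo "topic1 has 3 partitions"
```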

Configuring In-sync Replicas (ISR)

When creating a new topic, you can also configure the minimum number of in-sync replicas (ISR) that must acknowledge a write before it is considered successful. This setting is enforced for producers that send messages with acks=all (also written as acks=-1). To configure it at topic creation time, use the min.insync.replicas configuration property. For example:

```shell
./kafka-topics.sh --create --topic topic-insync --partitions 3 --replication-factor 3 --bootstrap-server localhost:9092 --config min.insync.replicas=3
```

What does it mean?

In Apache Kafka, a topic is divided into multiple partitions. Each partition is replicated across multiple brokers to ensure fault tolerance and high availability. The min.insync.replicas configuration specifies the minimum number of replicas that must acknowledge a write operation for it to be considered successful.

Imagine you have a topic with 3 partitions and a replication factor of 3. This means that each partition will be replicated across 3 brokers. When you produce a message to this topic, Kafka will write the message to one of the partitions. To ensure durability, Kafka requires that a minimum number of replicas (specified by min.insync.replicas) acknowledge the write operation before considering it successful.

For example, if you set min.insync.replicas=2, at least 2 replicas must acknowledge the write operation for it to be considered successful. If fewer than 2 replicas are in sync, for example because a broker is down, the write fails and the producer receives an error.

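In my example above, I am creating a topic named topic-insync with 3 partitions and a replication factor of 3, which requires at least three running brokers. By setting --config min.insync.replicas=3, I am requiring all 3 replicas to acknowledge a write before it is considered successful. Be aware that this leaves no room for failure: if even one of the three replicas falls out of sync, for example because its broker is down, producers using acks=all can no longer write to the topic until that replica catches up. A common compromise is a replication factor of 3 with min.insync.replicas=2, which tolerates one unavailable replica.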

This configuration helps guarantee data durability and consistency in Apache Kafka by ensuring that writes are only considered successful when they meet the specified minimum number of in-sync replicas.
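One way to see the trade-off is to compute how many replicas can drop out of sync before acks=all writes start failing: the replication factor minus min.insync.replicas. The function below is a hypothetical helper (tolerated_replica_failures is an illustrative name) that does this arithmetic. The commented commands at the end are an example under assumptions: three running brokers and a hypothetical topic named orders created with min.insync.replicas=2, plus the console producer sending with acks=all.

```shell
# Hypothetical helper: how many replicas of a partition can drop out of the
# in-sync replica set before producers using acks=all get errors?
# $1: replication factor, $2: min.insync.replicas
tolerated_replica_failures() {
  rf=$1 min_isr=$2
  # A min.insync.replicas above the replication factor could never be
  # satisfied, so treat it as an error here.
  if [ "$min_isr" -lt 1 ] || [ "$min_isr" -gt "$rf" ]; then
    printf 'min.insync.replicas must be between 1 and the replication factor (%s)\n' "$rf" >&2
    return 1
  fi
  echo $((rf - min_isr))
}

# tolerated_replica_failures 3 3   prints 0 (one replica down blocks acks=all writes)
# tolerated_replica_failures 3 2   prints 1

# Example (assumes three brokers; the topic name "orders" is hypothetical):
# ./kafka-topics.sh --create --topic orders --partitions 3 --replication-factor 3 \
#   --bootstrap-server localhost:9092 --config min.insync.replicas=2
# ./kafka-console-producer.sh --bootstrap-server localhost:9092 --topic orders \
#   --producer-property acks=all
```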

What is the best practice for creating topics in Kafka?

Creating topics in Kafka is not a one-size-fits-all solution. You need to follow some guidelines that can help you design and manage your topics effectively. Topics are the main way of organizing and categorizing the messages that you want to send and receive in Kafka. They have several properties that affect their performance, reliability and usability.

- Partitions: Partitions divide a topic into multiple ordered logs. Each partition can be stored on a different broker, which allows you to distribute the load and increase the scalability of your topic. Partitions also enable parallel processing of messages by multiple producers and consumers. However, partitions also introduce some complexity and overhead. For example, you need to balance the load across brokers, accept that message order is guaranteed only within a partition and not across the whole topic, and handle failures and rebalances. Therefore, you should choose an appropriate number of partitions for your topic based on the expected throughput, scalability and parallelism of your producers and consumers. A common rule of thumb is to have at least as many partitions as the maximum number of consumers in a consumer group.

- Replication: Replication is the process of creating copies of the data in each partition on other brokers. Replication provides fault tolerance and high availability for your topic: it allows you to recover from broker failures and continue serving messages without data loss. Replication also improves the durability and consistency of your topic. When producers use acks=all, it ensures that messages are written to multiple brokers before being acknowledged. However, replication also increases the storage and network usage of your topic, as well as the latency and complexity of producing and consuming messages. Therefore, you should choose an appropriate replication factor for your topic based on the desired reliability and availability of your data. A common rule of thumb is to have at least 3 replicas per partition and at least one more replica than the number of broker failures you want to tolerate.

- Names: Names are the identifiers that you use to refer to your topics in Kafka. Names are important for making your topics easy to understand and use by yourself and others. Names should reflect the purpose and content of your topics and be meaningful and consistent across your system. Kafka only accepts ASCII letters, digits, periods (.), underscores (_), and hyphens (-) in topic names, so spaces and other special characters are not allowed. It is also best to use either periods or underscores, but not both, because Kafka's metric names treat them the same way and topics such as my.topic and my_topic could collide. Therefore, you should choose names for your topics that are clear, descriptive and unique.
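The two sizing rules of thumb above can be expressed as quick arithmetic. The helpers below are hypothetical, not a Kafka tool: idle_consumers shows why the partition count should be at least the size of your largest consumer group (within a group, each partition is assigned to at most one consumer, so extra consumers sit idle), and min_replication_factor applies the rule of at least 3 replicas and at least one more replica than the number of broker failures you want to tolerate.

```shell
# Hypothetical sizing helpers based on the rules of thumb above.

# Within one consumer group, each partition is assigned to at most one
# consumer, so consumers beyond the partition count receive no messages.
# $1: number of partitions, $2: number of consumers in the group
idle_consumers() {
  idle=$(($2 - $1))
  [ "$idle" -lt 0 ] && idle=0
  echo "$idle"
}

# At least 3 replicas, and at least one more replica than the number of
# broker failures you want to tolerate.
# $1: number of broker failures to tolerate
min_replication_factor() {
  needed=$(($1 + 1))
  [ "$needed" -lt 3 ] && needed=3
  echo "$needed"
}
```

For example, a topic with 3 partitions read by a group of 5 consumers leaves 2 consumers idle, and tolerating 3 broker failures calls for a replication factor of at least 4.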

These are some of the main aspects that you should consider when creating topics in Kafka. Of course, there may be other factors that depend on your specific use case and requirements, such as the retention policy, compression type, message format, etc.

Final words

I hope this tutorial was helpful to you. To learn more about Kafka, please check my other Apache Kafka tutorials.